5 lessons I learnt during an AI hackathon

What's it like to be a quality engineer on a team developing an AI product?

Recently, I was lucky enough to participate in an AI hackathon at work. We were split into teams, given a problem to solve and had two working days to develop a pitch for our solution and a working prototype. I’m not going to focus too much on our challenge and solution, but I wanted to share some reflections from a Quality Engineers perspective on the two fantastic days of hacking.

1. Slicing the product up and experimenting

Given my experience with prompt engineering that has gone into my upcoming bookAI-Assisted Testing, I found myself drawn towards the prompt engineering portion of our product. Our prompts and the LLM we were using were to be part of a bigger application. So whilst the larger application was being we built ourselves an experimentation harness that allowed us to create and tweak our prompt with a large test dataset to evaluate the prompt’s performance. We would then add the prompt into the final application once it was built safe in the knowledge that our prompt had had some decent evaluation before it was added in.

As we experimented I was reminded of one of Andrew Ng’s letters Building Machine Learning Systems is More Debugging Than Development. In the letter, he explains how machine learning software has a much shorter initial development timescale but requires much more debugging and improvement. This was also my experience of our prompt experimentation. Using LangChain, we were able to build our harness in next to no time, but the tweaking and evaluating of the prompt was much longer. That wasn’t to say it wasn’t fun though. I enjoyed the process of making small tweaks to the prompt, starting the harness, running test data through the prompt and LLM and evaluating if the outputs had improved or not.

To be honest, this idea of slicing out the prompt portion of the application and evaluating it in parallel to the other didn’t come to mind straight away. It took some prompting (no pun intended) from a teammate to make me realise that this was possible. However, if we as quality engineers want to encourage teams to develop high-quality, ‘AI-driven’ applications, then we have to be comfortable with slicing our products into distinct parts so that the way we evaluate and test them is relevant to the slice of the product we’re working on. Attempting to run rapid evaluations against a full-stack version of the application would have been cumbersome and slow.

2. Bad data can be as vital as good data

It may come as no surprise to you that in the world of AI data is key. Going into the hackathon I knew that data was going to be important, but what I didn’t quite appreciate is just how much the quality of the data mattered to us in terms of evaluating success. When I normally think about the quality of a data set, I am thinking about whether it is enriched with the correct parameters, whether is it free from corruption, whether is it relevant to the context, etc. But what became apparent to me very early on was that in our context we wanted both good data and bad data to help us evaluate the LLM-based parts of our solution.

Our prototype was built to help teams rapidly improve data that was initially in a bad state and ultimately convert it into better, or more acceptable, data. This meant to evaluate our product we had to collect as much bad data as possible that we could use both in our few shot examples within our prompt, as well as for evaluating the prompt itself. The idea was that we could pass it the bad data, and then look at the output and determine if it was better or not. The challenge was that although we had been provided with a slide deck that demonstrated some examples of bad data, all of that had been cleaned manually before we started. Meaning that we had very little in the way of bad data to use to help bolster our prompt.

It makes sense that we, as a business, want to improve our products and data. We don’t want to sit around with bugs and data issues, losing business, until someone has enough bad data in place to start work on a solution. However, without that initial problematic data to ground your prompt we either have to spend valuable time on locating further bad data or we have to synthesise it. The latter is useful to help move a project forward but has its inherent risks as it is a facsimile of the problem space we want to solve and not the real thing.

3. The business decides what is good, not the AI

Fortunately, we were able to find a few examples to feed into our prompt and our evaluation process (as we only needed a small amount for our prototype). We extracted some examples from live data which were useful candidates for both evaluating success and demonstrating the value of our prototype. The issue was, what did a positive output look like?

The output that was to be created by the LLM was rooted in a domain that relies as much on a domain expert’s intuition as it does on specific rules that needed to be followed. With deterministic systems, we wouldn’t necessarily need to worry about this. The domain expert would likely provide a few examples of expected outcomes and we could then run our bad data through our system and compare our output to the expected outcomes. However, given we were using an LLM the outputs were always going to be different. And what’s more, the differences were subtle. To the untrained eye, we could have run our evaluation ten times and got what we believed to be ten acceptable results. However, the domain expert would have intrinsic knowledge that would allow them to spot subtle discrepancies that we couldn’t.

Given we had two days, and the people we were building this prototype for had work to do, we opted to rely on tools that would analyse the output for us. But if this was a serious project we would likely need to bring the business in closer, perhaps much closer than with a more traditional project, to guide us on what good looked like. Hamel Husain talks about this in detail in his post “Your AI Product Needs Evals” https://hamel.dev/blog/posts/evals/#level-2-human-model-eval and I was surprised just how quickly we needed that feedback loop in place.

4. Just because it’s indeterministic doesn’t mean it doesn’t need tests

When I discuss the challenges of testing AI with others there is always a sense that traditional methods of testing are redundant and ineffective. However, as we built out our product, I began to question this thinking a little. Sure, indeterministic systems do mean that we can’t have a one-to-one relationship of requirement = expected outcome = test. But when working with LLMs we still want to apply a certain level of structure and we need to test the guardrails we put up to get that structure can be tested using more traditional approaches. This again is something Hamel Husain talks about in “Your AI Product Needs Evals” when he makes the case for unit tests https://hamel.dev/blog/posts/evals/#level-1-unit-tests.

As an example, we wanted to ensure that the LLM, when prompted, would always return the results in a JSON format. The response would come back as a plain string, but as long as nothing but JSON was within the string, we could parse it into an object. Like so:

{

"example": "value"

}

However, we found that, intermittently, the LLM would return the JSON inside some markdown text wrapped around it like so:

```json

{

"example": "value"

}

```

This formatting would cause the parsing to fail, and the prototype to fall over. This is where unit testing could prove useful. Given that when we are evaluating our prompt with a large data set of examples, of which any of those could result in the bad JSON/Markdown output, it would make sense to have unit tests that check that the output is always in JSON format. This was a small revelation to me. Not everything that you want an LLM to do is indeterministic. Sure, we want to have dynamic values added within the JSON object, but we want the format structure to stay the same. Unit testing can help provide that fast feedback loop of when the static aspects of an LLM response break out of our guard rails. So that we can rapidly improve our prompts to prevent further issues from occurring.

5. LLMs are a piece of the puzzle and not the whole

Finally, one thing that was abundantly clear when it came to the demonstrations at the end of the hackathon is that LLMs may provide some of the core functionality of an ‘AI-driven product’, but a lot of what supports it and connects it to our context is the same. When LLMs exploded on the scene there was a gold rush to productise them, with people attempting to build a business around what was a collection of prompts. However, things seem to have settled somewhat and those that have successfully utilised LLMs have used them as a part of a bigger whole. Acting as assistants to speed up specific tasks within a wider workflow.

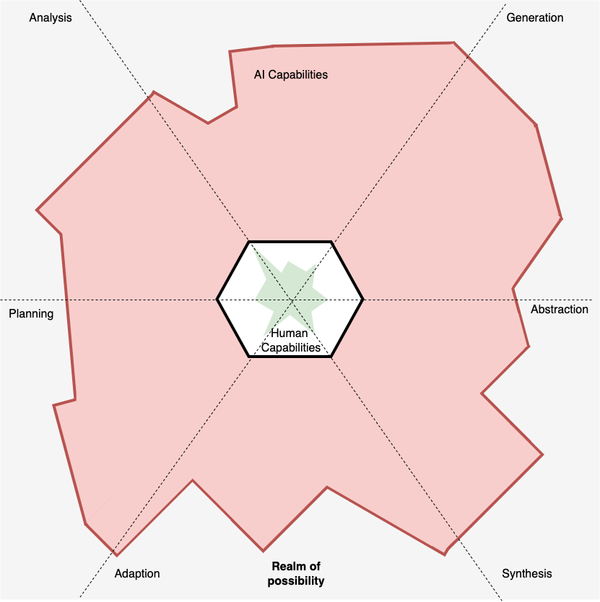

This human-centric design is important to keep in mind for Quality Engineers because it reminds us that we have to focus on the product as a whole and not get distracted by the shiny AI toys within it. Being an advocate for the user is a key aspect of quality engineering and this is more vital than ever when working with AI. We want to encourage that balance between the human and AI, allowing the AI to move quickly, but the human to be part of the feedback loop when things go wrong. To help guide us towards improving the quality of whatever AI-backed product we’re building.

Conclusion

There were also a lot of fun technical things that I learnt that have found their way into AI-Assisted Testing that I hope to share more about in the future. But my overall observation of the hackathon was that whilst the technology may feel different a lot stays the same in how a team works together to deliver a solution. Collaboration and deep analysis of a problem space are essential, and as Quality engineers, we have the skills to encourage and facilitate that behaviour. It can feel scary working with new technology or skill sets, but sometimes we find that once we dive into a new context there is a lot that feels the same. It’s still people making software after all.