Analysing the impact of generative AI using the Laws of Media

How can a mental model from the 70s help us to evaluate the impact of generative AI?

Recently, I shared my thoughts on how generative AI might impact quality engineering roles, focusing on market trends and the role of a quality engineer. This time, however, I want to revisit the topic from a more abstract, philosophical perspective, using a mental model from the 1970s to explore how generative AI might improve, hinder and change our roles in subtler ways than we expect.

McLuhan’s Laws of Media

The model we will be using is the ‘Tetrad of media effects’, sometimes also known as the ‘Laws of Media’, which was created by the philosopher Marshall McLuhan. I was introduced to the model at CASTx 2017 by Michael Bolton, who had written about using it to understand testing in his article McLuhan for Testers. The model lets us examine any medium from several angles to reveal how it might affect individuals and society. This raises the question: what do we mean by ‘media’?

McLuhan defined media as any technology that extends our abilities. This means that anything from social media and the internet to the written word and glasses can be considered media: they extend our abilities to communicate, observe and interact with the world. We can therefore consider generative AI a medium, because it extends our abilities to create, transform and learn.

As generative AI can be considered a medium, we can apply the Laws of Media to it to help us ask the following four questions:

- Enhances: What does generative AI enhance (or extend) in the field of quality engineering?

- Obsolesces: What does generative AI make obsolete in the field of quality engineering?

- Retrieves: What previously obsolete concepts does generative AI retrieve in the field of quality engineering?

- Reverses: What might generative AI reverse or flip into in the field of quality engineering if pushed to its extreme?

These four questions make up the Tetrad of media effects. The goal is to reflect on each question and see what we come up with; the results can help us better understand how quality engineering might change in a world of generative AI.

Exploring generative AI with the Laws of Media

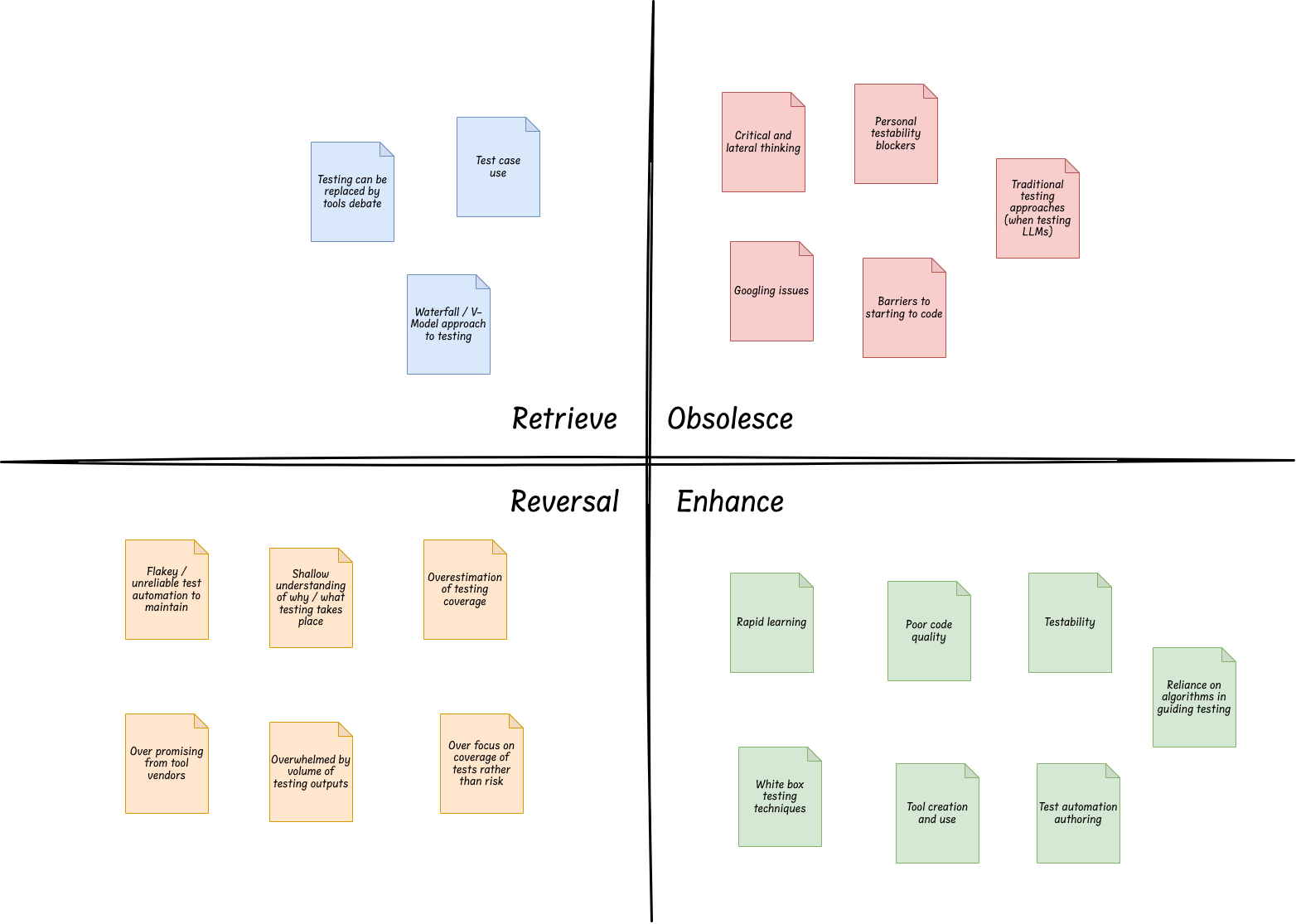

I started by creating a board, adding the four quadrants of the Laws of Media, and then worked through each of them in the following order: Retrieve, Extends, Reverses and Obsolesces. The results can be seen in the board above.

I’ve only added a handful of items to each section, enough to demonstrate both the types of impact we might expect and the value of using the Laws of Media. Even so, diving into each section reveals a lot we can learn.

Retrieve

The retrieve quadrant is a useful place to start because it helps us consider familiar topics, concepts and media related to the medium in question. However, it can also be the trickiest quadrant to populate, which is why it contains fewer items than the others. McLuhan suggests that by starting here, the items we retrieve can prompt ideas for the other quadrants, and that was certainly the case for me.

To kick this quadrant off, I spent some time considering the wave of AI testing tools that have cropped up in the wake of the generative AI boom, and it became apparent to me that old conversations were coming back. Firstly, the ‘Testing can be replaced by tools’ debate seems to be back in full swing. These conversations are cyclical and familiar. I’ve seen the pattern multiple times: tool vendors claim their tools can replace testing, pushback follows, reality bites, and vendors rein in their claims. With the perceived ‘magic’ of AI, vendors appear to be claiming, yet again, that their tools are as effective as human-led testing.

But why is this the case? Unlike previous cycles, which focused on a tool’s ability to interact with a system, this one seems to focus on how tools generate tests. This brought me to the second post-it: ‘Test case use’. Most of the tools I’ve seen that use generative AI demonstrate their ability to rapidly generate test cases, all written in a way that gives the impression they were written by a human, yet generated en masse. It feels like users of these tools will find their use of test cases accelerating as the cost of generating them falls (we’ll explore whether that is a good thing shortly).

Finally, these tools need context to generate test cases successfully, which for many people means requirements. Many AI testing tools offer features that generate not just test cases but also requirements, reading raw documentation to create requirements that are then used to build test cases. What strikes me is how rooted this approach is in a waterfall / V-model mindset. As the line commonly attributed to McLuhan puts it:

We Shape Our Tools, and Thereafter Our Tools Shape Us

This is why I added my final post-it: ‘Waterfall / V-Model approach to testing’. Generating requirements and then test cases feels very familiar to the waterfall approach, and there is a risk that using these tools will nudge us back towards it. Of course, not everyone will be swayed. But those who don’t think critically about how these tools influence them are more at risk of adopting approaches that are a step back, rather than a step forward, in quality engineering and testing techniques.

Extends

Next, I turned my attention to the ‘extend’ quadrant. What is interesting about this area is that it’s agnostic as to whether a medium extends us positively or negatively. So, when considering how generative AI extends our abilities, I raised positive extensions such as:

- Rapid learning - We can use generative AI to teach us new information and clarify concepts that can help us quickly understand a context.

- Test automation authoring - Using generative AI to create elements of our automation for us, such as boilerplate code or test data.

- White box testing - Generative AI can extend our ability to understand how code works, and which risks to consider, by analysing that code for us.

But generative AI can also enhance bad patterns and practices, which is why I raised the following, more negative, extensions:

- Reliance on algorithms - Whilst generative AI can be useful, we run the risk of over-trusting its results, meaning we may be misinformed or misled in our testing.

- Poor code quality - Copilot tools can aid development, but their suggestions are only as good as the examples the underlying model was trained on. There’s a lot of bad code out there, which might affect Copilot’s recommendations and result in products being built with poor-quality code.

Investigating ‘extend’ once again highlights that whilst generative AI can provide real value, we need to be aware of the potential pitfalls. This is one of the main points I make in Software Testing with Generative AI. There is a lot of value to be reaped from the use of these tools, but we must develop a mindset of healthy scepticism so that we aren’t misled when we use them.

Reverses

The ‘reverse’ quadrant lets us push the ‘extend’ quadrant to an extreme to see how overusing a medium can have a negative impact. We’ve already hinted at how generative AI might hinder us in the ‘extend’ quadrant, so we can continue down this path to consider what it might look like for an individual or team to overuse generative AI.

The items I’ve added fall into two categories. The first is tool-focused issues, such as:

- Flaky/unreliable automation to maintain - caused by poor-quality code generated by an AI.

- Being overwhelmed by the volume of testing outputs - caused by the over-generation of automated tests or test cases.

- Over-promising from tool vendors - caused by a misunderstanding of a tool’s ability and the role of quality engineering.

The second category is the impact on how we perceive our testing. Overusing generative AI might encourage a shallow understanding of what testing takes place and why. If we wrongly assume that testing is just the execution of test cases, then asking an AI to exhaustively create tests will push us to focus on test coverage rather than risk, and the AI will serve as an echo chamber, reinforcing incorrect perceptions of testing and leading us to overestimate our coverage.

These observations serve as cautionary tales about the use of generative AI. However, this is the nature of exploring the overuse of any medium. It’s important to remember that any tool can reverse on us if overused, so we should be mindful to use tools when they are needed, not on every occasion.

Obsolesces

Finally, we can consider what tools, habits and concepts might be made redundant by generative AI. Again, this quadrant is agnostic. For example, generative AI might make Googling issues obsolete. This could be perceived as a positive thing, as we get more targeted answers to our questions, or a negative thing, as there is less connection with the community and experts and more exposure to hallucinations.

In the more positive camp, there are things generative AI can help remove. These include barriers to starting to code, since users can have generative AI teach them and generate code for them, and personal testability blockers, where generative AI can give us answers quickly in a form that is easier to parse (depending on our learning style).

Of course, the big concern is that incorrect use of generative AI could lead individuals to exercise less critical and lateral thinking. Deferring our creative and critical thinking to AI is a bad idea. That’s why in Software Testing with Generative AI I suggest we adopt a model known as the ‘Area of effect model’. It encourages us to always think about how generative AI can extend the work we do, rather than replace it. This means we remain in control of how we use generative AI, rather than deferring direction and planning to tools that aren’t built for that type of work.

Conclusion

Reflecting on the ideas I added to each quadrant, it could be argued that I lean towards the pessimistic side of generative AI use, but that’s not the case. I believe a healthy scepticism of what a tool can do is essential, as is an awareness of its potential pitfalls. That awareness helps me to be more cautious and selective in how I use these tools, which in turn makes me more successful with them. The quadrants are by no means complete and much more could be added, but hopefully this exercise demonstrates the power of the Laws of Media. They can be applied to any medium we use in quality engineering to highlight the subtle ways in which it affects us, just as we’ve explored the ways generative AI may influence us.

This isn’t a comprehensive review, and it’s rooted in my own experience. But the Laws of Media are accessible to anyone, so to conclude this short series of posts about the impact of AI on quality engineering, I invite you to try the model yourself. Using it, you can dig deeper into the influence AI, or any other medium, might have on your role, and form your own opinions rather than taking others’, including mine, as truth.