How Can Gen AI Be Used In Shift Left Activities?

Learn how AI can help improve our understanding of features and our ability to slice work into manageable chunks.

Welcome back to another in a series of posts that follow up on questions I’ve been asked during AMA sessions on Generative AI and testing. If you want me to discuss Generative AI, testing, and Quality engineering with your team, I offer free, one-hour, online ‘Ask me anything’ sessions for teams. Reach out to me on LinkedIn if you want to talk more.

This week’s question is:

What’s the view on engaging AI accelerating Shift Left strategies?

I’ve spoken in previous posts about how the current focus of Gen AI in testing has been on test cases, so I feel this is a great question to ask. Let’s dig into how we can expand our thinking and apply Gen AI to activities that happen before testing a system begins.

Mindset

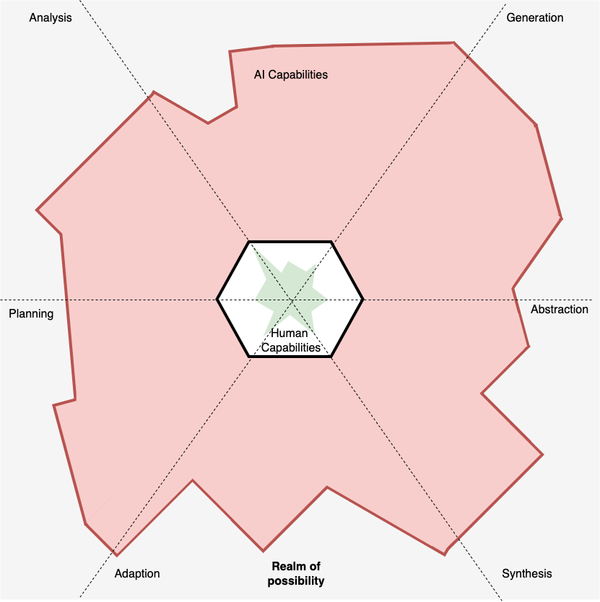

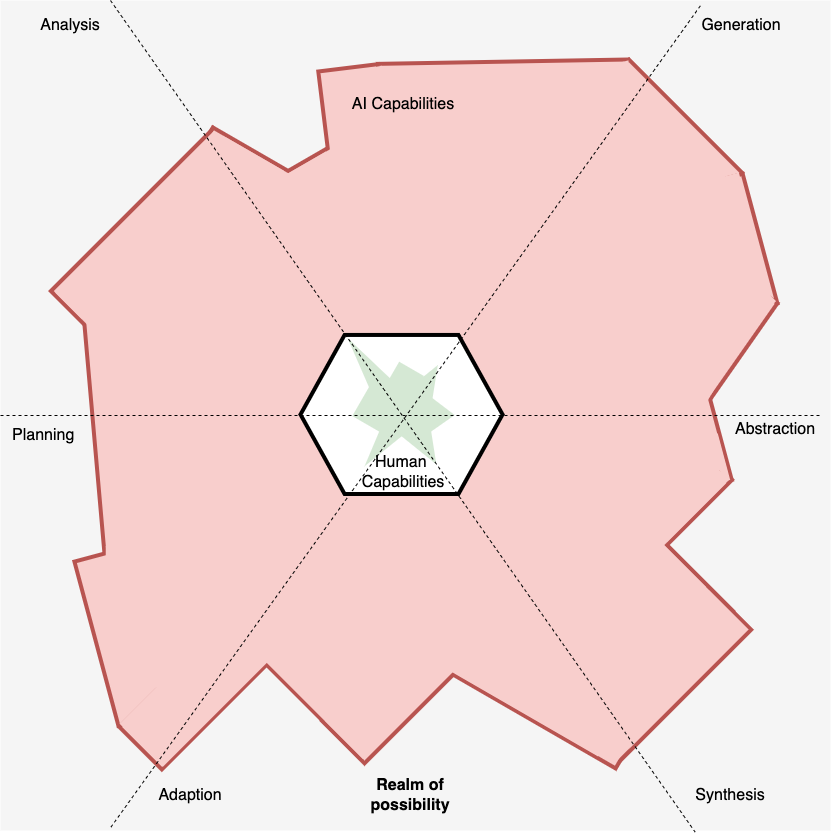

Before we dig into the question of Gen AI and shift left, let’s talk about the relationship between us and tools. The success of tool use depends much more on having the right mindset around how tools help us than on our technical ability to use said tools. We get better value out of a tool when we employ it to aid us rather than replace us. I summarise this as an area of effect model in Software Testing with Generative AI, which I have updated in a recent post.

What this model is communicating is that I, as an individual, set the direction and I determine what is the right course of action. The tooling, in this case, Generative AI, is in place to help extend and enhance my abilities.

This mindset is very important when it comes to using Gen AI during shift left activities because we’re dealing with ideas, thoughts and emotions. We’re relying on Gen AI to be creative in the context of helping us to understand and critique ideas. However, because Gen AI is trained on giant, generalised corpora of data, it can neither dig into the subtext of what we’re discussing during a shift left activity, such as three amig@s, nor understand our context in a detailed manner.

This isn’t a criticism of Gen AI per se, but we should be mindful of what we put into an LLM and what we get out. If we put a shallow prompt in, we get a shallow response back. Therefore, when using Gen AI to help support the exploration of ideas and requirements, we need a strong grounding in what is being explored first and then use prompting techniques to frame how we want Gen AI to help us and provide the necessary context for it to process. We also need to treat all responses with healthy scepticism so we pick what is useful and ignore the rest. To help us better appreciate this mindset, let’s look at a few examples.

Improving your testability

For me, the goal of shift left testing is to establish a shared understanding across my team. A shared understanding allows us to identify potential issues earlier and minimise rework. This is why we have collaborative discussions. We as testers and QE need to help facilitate and lead conversations so that we can achieve our stated goal of shared understanding. This means we also need to have an understanding of what’s being discussed on both a technical and business basis. In other words, our intrinsic testability impacts our ability to carry out shift left testing effectively.

By intrinsic testability, we mean our knowledge of the product we’re building and the problem we’re solving (Taken from Ash and Rob’s Ten P’s of Testability). If we have a deep technical understanding of how our product is built and the technology it uses, then facilitating a conversation with our team is easier. But if we don’t know the details of the discussion going into the collaborative sessions, then our effectiveness is diminished. Therefore, we want to be as informed as possible, and this is where Gen AI has the potential to help.

Act as a JavaScript/TypeScript developer. Explain how the code delimited by the tag <example> works. The audience is someone who is experienced with software development but is not necessarily technically savvy. Check that your explanation of the software is relevant to the audience and relates directly to the code before outputting a response.

<example>

// I put my code in here

</example>

Notice how the prompt isn’t just asking how the code works but is also communicating who the audience is and what their level of experience is. This helps tune the response so that it’s relevant and valuable to us. Using a prompt like this, we can quickly familiarise ourselves with aspects of our product, arming ourselves with the right knowledge and language to help facilitate our testing and any future discussions with our team.
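If we find ourselves reusing this prompt for different parts of a codebase, it can be turned into a small template. The following is a minimal Python sketch rather than anything prescribed by the prompt itself: the function name `build_explain_prompt` and its parameters are assumptions made for illustration, and the resulting text still has to be pasted or sent to whichever LLM you use.

```python
def build_explain_prompt(
    code: str,
    language: str = "JavaScript/TypeScript",
    audience: str = (
        "someone who is experienced with software development "
        "but is not necessarily technically savvy"
    ),
) -> str:
    """Wrap a code snippet in the explanation prompt (hypothetical helper)."""
    return (
        f"Act as a {language} developer. "
        "Explain how the code delimited by the tag <example> works. "
        f"The audience is {audience}. "
        "Check that your explanation of the software is relevant to the audience "
        "and relates directly to the code before outputting a response.\n\n"
        # The snippet is delimited so the model can tell instructions from code.
        f"<example>\n{code.strip()}\n</example>"
    )


# Illustrative snippet only; swap in the code you want explained.
prompt = build_explain_prompt(
    "const total = items.reduce((sum, item) => sum + item.price, 0);"
)
print(prompt)
```

Keeping the audience as a parameter makes it easy to retune the same prompt for, say, a product owner or a new joiner without rewriting the instructions.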

It’s worth mentioning, though, that we should be careful not to take everything at face value, especially if we lack the personal oracles to sense-check responses for hallucinations. If required, we should validate what we’ve learnt, and collaboration with our team can be a great way to clarify our understanding further.

Assisting with complexity

Another approach we can take with Gen AI is to use it as an assistant when analysing and slicing work. One of the key drivers of successful continuous delivery is being able to release work in smaller chunks that are sliced correctly. This enables a smoother development process, creating work that is quick to build, test, release and get feedback on. However, to achieve this, we need to be able to understand requirements deeply and slice them sensibly, which requires experience and skill. The process is something we should own as a team, but that’s not to say we can’t leverage AI to assist, either to shake up our thinking or to validate it.

For example, consider this user story with acceptance criteria:

In order to ensure a booking is secured

As a guest

I want the ability to pay at the point of booking

Feature: User booking and payment

Scenario: The one where the user makes a booking and pays

Given I am on the homepage

When I select a room and make a booking

And I provide valid card payment details

Then I receive confirmation that my booking has been made

Scenario: The one where the user makes a booking and opts to pay later

Given I am on the homepage

When I select a room and make a booking

And I select the option to pay later

Then I receive confirmation that my booking has been made along with details of when payment is due

Scenario: The one where the user cancels a booking

Given I am on the homepage

When I select a room and make a booking

And I opt to cancel my payment

Then no booking is made and I receive no confirmation

Scenario: The one where the user gets their payment rejected

Given I am on the homepage

When I select a room and make a booking

And I provide incorrect card details to make a payment

Then I am asked to provide correct card details

And no booking is made and I receive no confirmation

With this story in place, we can add it to the following prompt to start a chat that can help guide us:

Acting as a product engineer, you will be provided a user story with acceptance scenarios. Your task is twofold:

1. Ask questions to clarify areas of the user story that aren't clear and suggest changes or additions to the user story.

2. Once requested later in the chat, slice the story into smaller manageable sections of work that the development team can work on.

The story is for a team that is developing features for a bed & breakfast booking site. The goal is to create a product that can be administrated by bed & breakfast owners and provides guests with a smooth process to research and book rooms. The team consists of two backend developers, one front-end, a quality engineer, a business analyst and a product owner.

Here is the user story:

<Enter user story here>

To demonstrate the prompt and subsequent conversation, you can view a conversation I had with Claude here.
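The prompt above has three parts: the instructions, the team context and the story itself. As a rough sketch of how those parts could be kept separate and reassembled for each new story, here is a small Python example. The `TeamContext` class and `build_slicing_prompt` function are hypothetical names introduced for illustration, not something used in the conversation with Claude.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamContext:
    """Hypothetical container for the context we give the LLM."""
    product: str
    goal: str
    team: str


def build_slicing_prompt(story: str, context: TeamContext) -> str:
    """Combine instructions, team context and user story into one prompt."""
    if not story.strip():
        # A shallow (or empty) prompt gets a shallow response, so fail early.
        raise ValueError("A user story is required")
    return "\n\n".join([
        "Acting as a product engineer, you will be provided a user story "
        "with acceptance scenarios. Your task is twofold:",
        "1. Ask questions to clarify areas of the user story that aren't clear "
        "and suggest changes or additions to the user story.",
        "2. Once requested later in the chat, slice the story into smaller "
        "manageable sections of work that the development team can work on.",
        f"The story is for a team that is developing features for {context.product}. "
        f"The goal is to {context.goal}. The team consists of {context.team}.",
        "Here is the user story:",
        story.strip(),
    ])


context = TeamContext(
    product="a bed & breakfast booking site",
    goal=(
        "create a product that can be administrated by bed & breakfast owners "
        "and provides guests with a smooth process to research and book rooms"
    ),
    team=(
        "two backend developers, one front-end, a quality engineer, "
        "a business analyst and a product owner"
    ),
)
story = (
    "In order to ensure a booking is secured\n"
    "As a guest\n"
    "I want the ability to pay at the point of booking"
)
slicing_prompt = build_slicing_prompt(story, context)
print(slicing_prompt)
```

Separating the context from the story means the grounding we have built up as a team is written once and reused, while the story changes with each conversation.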

Reflecting on the interaction, there are a few notable points:

- It generated a considerable list of questions that required answers. Some of them were useful, such as the questions about confirmation pages, and some were less so, such as what booking details are required. When using AI in this way, we don’t necessarily have to answer every question; we can pick and choose what is useful to us and what is not.

- Claude can expand the user story to incorporate the additional details that came out of the questioning. Admittedly, the initial format of the user story felt too imperative for my tastes, but a quick correction during the conversation helped to improve the output. Again, we don’t have to accept this output, but it sped up the process of putting together an updated feature file, which could be tweaked further outside of the AI conversation.

- Finally, it was able to propose a strategy for slicing the work up. Whether we agree with this slicing is up to us. But again it serves as a foundation to spark conversation and determine the next steps.

So, as we can see, Gen AI can help provide an alternative perspective, but it’s important to always keep in mind that it is just one additional opinion, not a fact. Similar to the testability example, we should maintain a healthy scepticism and not take everything the Gen AI responds with as gospel. Instead, we pick and choose what we believe is valuable and leave the rest.

Conclusion

What these examples demonstrate is that we’re using Gen AI to support the actions we carry out during shift left testing, to help extend and enhance our analysis. By using the area of effect model, we understand that the context, knowledge and direction come from us and that Gen AI is being used to help shake up our biases and get us to change our perspectives during our analysis. By doing this, we can utilise Gen AI as an assistant to bolster our work in a way that allows us to determine which responses from an LLM are acceptable and useful and which are not. What makes this model so useful is that it can be applied across the continuous testing model, something I cover in Software Testing with Generative AI, and it can also be applied to non-AI-based testing tools. So whatever tooling you’re using, ask yourself how it is enhancing your work. If you can’t answer that, then maybe it’s time to change your approach.

If you enjoyed this post and would like me to join your team to discuss Generative AI, testing and quality engineering, I offer a free, one-hour, online ‘Ask me anything’ for you and your team. Reach out to me on LinkedIn if you want to talk more.